Agentic-Chatbot (Duke)

Agentic AI chatbot that routes queries across curriculum, events, and places, augments answers with Pinecone RAG, and runs on GPT-4o-mini via LangChain.

Project Overview

This full-stack web app implements an agentic workflow for Duke University FAQs. A router agent dispatches queries to specialized agents (curriculum, events, locations). For curriculum-heavy questions, a Pinecone RAG index augments answers with Duke content scraped offline. The backend is Flask on EC2; the frontend (React + Vite) connects to it over a WebSocket path through AWS Lambda.

Problem

- Prospective/incoming students need friendly, accurate answers about programs, events, and places.

- Single-agent chatbots struggle to route questions across domains and to keep institutional information up to date.

- Answering curriculum details often requires grounded, searchable references.

Architecture & Solution

Router Agent

Central decision-maker with a system prompt that selects the right agent(s) and synthesizes cross-domain answers.
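The dispatch pattern can be sketched as follows. This is a minimal sketch, not the project's router: the hypothetical `classify` function uses keyword matching as a stand-in for the GPT-4o-mini call that the real router makes with its system prompt, and the keyword lists and fallback agent are illustrative assumptions.

```python
from typing import Callable, Dict, List

# Hypothetical keyword lists standing in for the LLM routing decision.
KEYWORDS: Dict[str, List[str]] = {
    "curriculum": ["course", "class", "major", "program", "credit"],
    "events": ["event", "concert", "game", "talk", "weekend"],
    "locations": ["where", "building", "library", "dining", "parking"],
}


def classify(query: str) -> List[str]:
    """Return every agent whose keywords appear in the query (may be several)."""
    text = query.lower()
    return [name for name, words in KEYWORDS.items() if any(w in text for w in words)]


def route(query: str, agents: Dict[str, Callable[[str], str]]) -> str:
    """Dispatch the query to the selected agents and merge their answers."""
    selected = classify(query) or ["curriculum"]  # hypothetical fallback agent
    answers = [f"[{name}] {agents[name](query)}" for name in selected]
    # The real router synthesizes with the LLM; this sketch simply joins the parts.
    return "\n".join(answers)


if __name__ == "__main__":
    demo_agents = {
        "curriculum": lambda q: "curriculum answer",
        "events": lambda q: "events answer",
        "locations": lambda q: "locations answer",
    }
    print(route("Where is the library and what events are on this weekend?", demo_agents))
```

Because `classify` can return several agents, a cross-domain question reaches each relevant agent; in the real system the merge is an LLM synthesis step rather than a string join.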

Specialized Agents

Curriculum, Events, and Locations agents inherit from a shared BaseAgent and wrap Duke API tools via LangChain.
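A sketch of the inheritance pattern, assuming a hypothetical `BaseAgent` interface with an abstract `tools` property and a shared `run` method. Plain callables stand in for the LangChain-wrapped Duke API tools, and the tool names are invented for illustration.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict

Tool = Callable[[str], str]  # stand-in for a LangChain tool: query in, text out


class BaseAgent(ABC):
    """Hypothetical shared base class: a name plus a registry of tools."""

    name = "base"

    @property
    @abstractmethod
    def tools(self) -> Dict[str, Tool]:
        """Map tool names to callables; each subclass supplies its own."""

    def run(self, query: str) -> str:
        # Simplified policy: call every tool and join the results. A LangChain
        # agent would instead let the LLM decide which tool(s) to call.
        results = [f"{tool_name}: {fn(query)}" for tool_name, fn in self.tools.items()]
        return f"{self.name} -> " + "; ".join(results)


class CurriculumAgent(BaseAgent):
    name = "curriculum"

    @property
    def tools(self) -> Dict[str, Tool]:
        # Hypothetical tool; the real agent wraps the Duke curriculum API.
        return {"search_courses": lambda q: f"courses matching '{q}'"}


class EventsAgent(BaseAgent):
    name = "events"

    @property
    def tools(self) -> Dict[str, Tool]:
        # Hypothetical tool; the real agent wraps the Duke events API.
        return {"search_events": lambda q: f"events matching '{q}'"}


class LocationsAgent(BaseAgent):
    name = "locations"

    @property
    def tools(self) -> Dict[str, Tool]:
        # Hypothetical tool; the real agent wraps the Duke places API.
        return {"find_place": lambda q: f"places matching '{q}'"}
```

Keeping `run` in the base class means each domain agent only declares its tools, which is the main payoff of the shared parent.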

RAG (Pinecone)

Offline scraping → paragraph-based chunking with sliding windows → embeddings → Pinecone namespaces with metadata for traceability.
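The chunking step can be sketched under stated assumptions: paragraphs are separated by blank lines, each chunk spans `window` paragraphs, and the window advances by `stride` paragraphs so neighboring chunks overlap. The window sizes, the record layout, and the `source_url` metadata field are hypothetical. Embedding is left out, so each record holds text plus metadata, ready to be embedded and upserted into a Pinecone namespace.

```python
from typing import Dict, List


def split_paragraphs(text: str) -> List[str]:
    """Split on blank lines and drop empty paragraphs."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]


def sliding_window_chunks(paragraphs: List[str], window: int = 3, stride: int = 2) -> List[str]:
    """Group consecutive paragraphs into overlapping chunks.

    With window=3 and stride=2, neighboring chunks share one paragraph,
    so text near a chunk boundary appears in both chunks.
    """
    if window < 1 or stride < 1:
        raise ValueError("window and stride must be positive")
    chunks = []
    start = 0
    while start < len(paragraphs):
        chunks.append("\n\n".join(paragraphs[start:start + window]))
        if start + window >= len(paragraphs):
            break  # this window already reaches the last paragraph
        start += stride
    return chunks


def build_records(text: str, source_url: str, namespace: str) -> List[Dict]:
    """Attach traceability metadata to each chunk (embeddings added later)."""
    chunks = sliding_window_chunks(split_paragraphs(text))
    return [
        {
            "id": f"{namespace}-{i}",
            "text": chunk,
            "metadata": {"source_url": source_url, "chunk_index": i, "namespace": namespace},
        }
        for i, chunk in enumerate(chunks)
    ]
```

Storing the source and chunk index in metadata is what lets a retrieved passage be traced back to the page it came from.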

Duke APIs

Typed service layer for curriculum, events, and places; subject codes are stored in data/metadata/subjects.json.
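A sketch of a typed loader for the subject codes, assuming data/metadata/subjects.json holds a list of objects with `code` and `name` fields. The file's real schema is not documented here, so the field names and the `Subject`, `parse_subjects`, `load_subjects`, and `resolve_subject` names are hypothetical.

```python
import json
import re
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Subject:
    code: str  # subject code, normalized to upper case
    name: str  # human-readable subject name


def parse_subjects(raw: List[dict]) -> Dict[str, Subject]:
    """Index subjects by upper-cased code (assumed schema: {"code", "name"})."""
    return {item["code"].upper(): Subject(item["code"].upper(), item["name"]) for item in raw}


def load_subjects(path: Path = Path("data/metadata/subjects.json")) -> Dict[str, Subject]:
    """Read the JSON file and return the typed index."""
    return parse_subjects(json.loads(path.read_text(encoding="utf-8")))


def resolve_subject(subjects: Dict[str, Subject], text: str) -> Optional[Subject]:
    """Return the first subject whose code appears as a standalone word in text."""
    for token in re.findall(r"[A-Z0-9]+", text.upper()):
        if token in subjects:
            return subjects[token]
    return None
```

Resolving a free-text mention to a known code before calling the curriculum API keeps malformed subject strings out of the request.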

Infra

Flask runs on EC2, and WebSocket traffic reaches the backend through AWS Lambda. GPT-4o-mini is the LLM, orchestrated with LangChain tools.
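A sketch of the Lambda side of the WebSocket path, assuming an API Gateway WebSocket API that invokes the function with `requestContext.routeKey`, `requestContext.connectionId`, and a JSON `body`. The injected `forward` callable stands in for the HTTP call to the Flask backend on EC2; its signature, the `message` field, and the reply shape are hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical signature for the call to the Flask backend: (connection_id, message) -> answer.
Forwarder = Callable[[str, str], str]


def make_handler(forward: Forwarder) -> Callable[..., Dict[str, Any]]:
    """Build a Lambda handler that relays WebSocket messages to the backend."""

    def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
        ctx = event.get("requestContext", {})
        if ctx.get("routeKey") in ("$connect", "$disconnect"):
            return {"statusCode": 200}  # nothing to relay on connect/disconnect
        try:
            payload = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return {"statusCode": 400, "body": "invalid JSON"}
        message = payload.get("message", "") if isinstance(payload, dict) else ""
        if not message:
            return {"statusCode": 400, "body": "empty message"}
        answer = forward(ctx.get("connectionId", ""), message)
        # This body reaches the client only if the route has a route response
        # configured; otherwise replies would be pushed with post_to_connection.
        return {"statusCode": 200, "body": json.dumps({"answer": answer})}

    return handler
```

Injecting `forward` keeps the handler testable without network access; in deployment it would wrap an HTTP request to the EC2 host.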

Technology Stack

Frontend

- React (Vite) · WebSocket client

Backend

- Flask on EC2 · AWS Lambda (edge filtering/routing)

Agents

- LangChain router + domain tools · GPT-4o-mini

RAG

- Pinecone · paragraph chunking + sliding window · metadata for citations

Evaluation

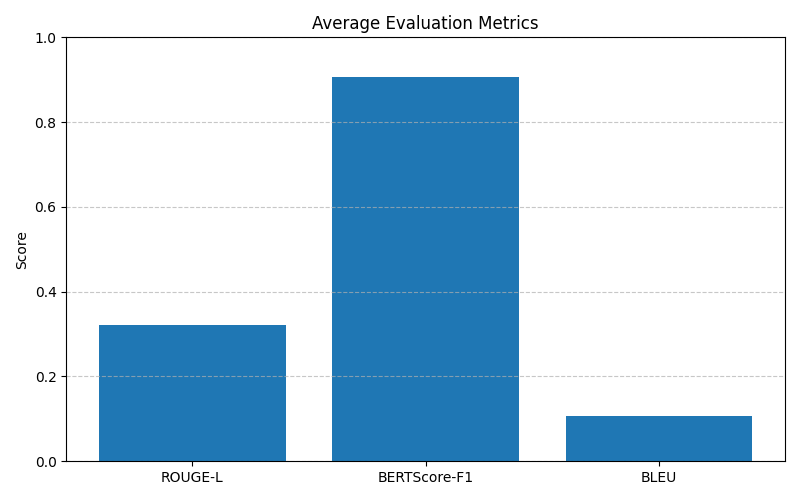

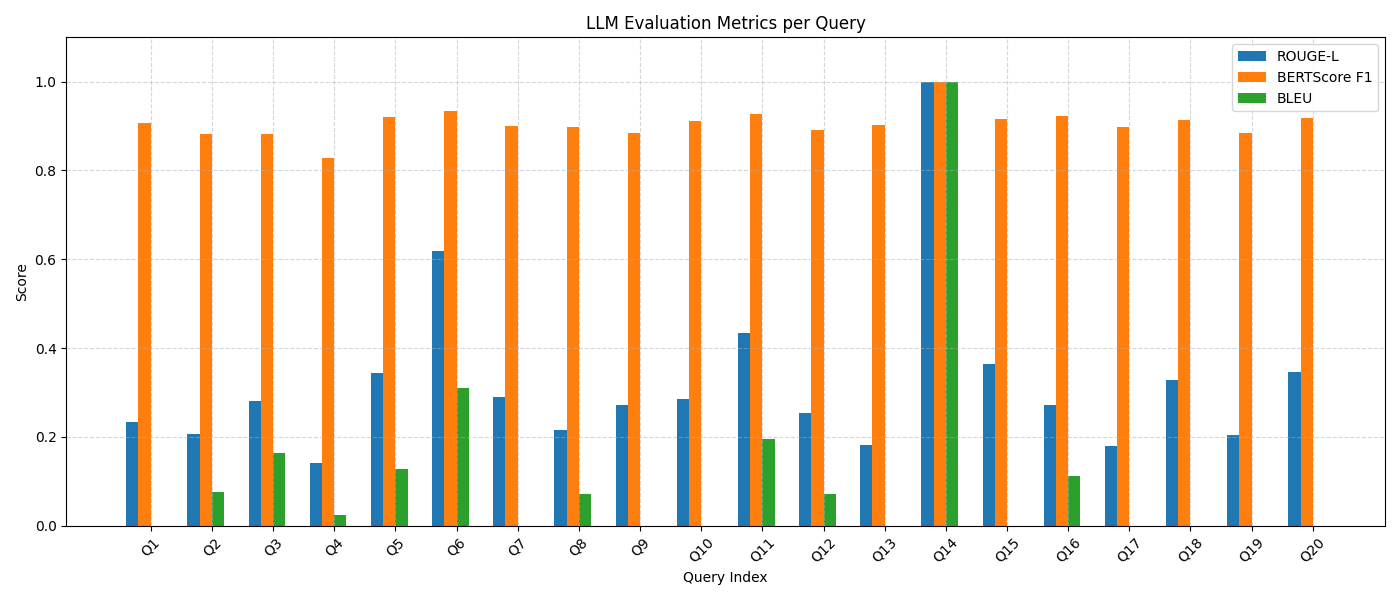

Automatic metrics (ROUGE-L, BERTScore-F1, BLEU) were computed on 20 benchmark questions; results are saved to llm_eval_results.csv.
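For reference, ROUGE-L scores a candidate answer against a reference by the longest common subsequence (LCS) of their tokens. Below is a minimal pure-Python sketch of the ROUGE-L F-measure using lower-cased whitespace tokens; library implementations (for example the `rouge-score` package) apply their own tokenization and optional stemming, so scores from this sketch may not match llm_eval_results.csv.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Classic dynamic-programming LCS length, O(len(a) * len(b))."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1: harmonic mean of LCS precision and LCS recall."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For example, "the cat sat on the mat" against "the cat is on the mat" shares the 5-token subsequence "the cat on the mat", so precision and recall are both 5/6 and the score is about 0.833.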

In a 5-user study, participants rated answers for helpfulness and accuracy, with an average score of 4.12/5.

Cost Minimization

- Selective agent invocation by the Router; parallel calls only when needed.

- Lambda pre-filters queries unrelated to Duke.

- GPT-4o-mini for strong performance at lower cost, plus prompt tuning.

- Shared memory across agents to reduce token usage; offline scraping + RAG for curriculum details.
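The Lambda pre-filter can be sketched as a cheap check that rejects clearly off-topic queries before any LLM call. This assumes a hypothetical keyword allow-list; the real Lambda's filtering rule is not described here, and a small classifier would be a reasonable alternative.

```python
import re
from typing import Iterable

# Hypothetical allow-list; a real filter would be tuned on logged queries.
DUKE_TERMS = {
    "duke", "course", "courses", "major", "minor", "class", "classes",
    "event", "events", "campus", "library", "dining", "dorm", "professor",
}

WORD = re.compile(r"[a-z0-9']+")


def is_duke_related(query: str, terms: Iterable[str] = DUKE_TERMS) -> bool:
    """True if any allow-listed term appears as a whole word in the query."""
    vocab = set(terms)
    return any(token in vocab for token in WORD.findall(query.lower()))


def prefilter(query: str) -> dict:
    """Return a short-circuit reply for off-topic queries, else pass the query on."""
    if not is_duke_related(query):
        # Rejected here, so no GPT-4o-mini tokens are spent on this query.
        return {"forward": False, "reply": "I can only help with Duke-related questions."}
    return {"forward": True, "query": query}
```

A keyword list trades recall for near-zero cost: some valid questions may be rejected, so in practice the list would need tuning.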